Can we really trust AI with our data?

We trust AI with our data because it seems easy, cheap, and efficient. But while our models keep getting smarter, we’re gradually losing control. Under the CLOUD Act, the U.S. government can demand access to data from companies like Microsoft, Amazon, and Google — even if that data is stored in Europe. What once felt like a convenient choice has become a matter of autonomy and trust.

In this article, I’ll show what organizations can already do today to protect their digital sovereignty, without waiting for Brussels or Big Tech to act.

In a previous article on Frankwatching, I explored the link between data, HR, and privacy, focusing on how organizations handle personal data responsibly. This time, I want to take it a step further: how we, as Europe, can regain control over our digital infrastructure, and why that matters now more than ever.

Our digital autonomy is under pressure. Yet the solutions are closer than they appear. Across Europe, new initiatives are emerging that prove things can be done differently. Fresh players are building technologies based not on dependency, but on transparency, collaboration, and trust.

European AI alternatives are gaining momentum

A new wind is blowing through Europe. Startups like Mistral, Aleph Alpha, and Hugging Face (EU Cloud) are developing AI systems that actually live up to European values: privacy, transparency, and data minimization.

Take Mistral as an example. The company operates within European data centers, is open about its model architecture, and allows organizations to opt out of data reuse — GDPR by design. Still, it’s not a silver bullet. Mistral is young, still relies on some subcontractors outside the EU, and its audits have yet to prove themselves.

The potential is huge, but trust requires time, openness, and European cooperation. And that’s exactly where things are lagging — policy and practice aren’t moving at the same pace.

Between policy and practice: Where do we lose control?

The AI Act, which entered into force in August 2024, aims to bring more transparency, risk assessment, and accountability to AI systems. It’s a vital step, but legislation alone doesn’t change behavior.

Meanwhile, the European Commission is pursuing a dual strategy under the banners Apply AI and AI in Science: accelerating AI adoption in business and public sectors while investing in research, talent, and infrastructure. Europe is trying not just to regulate innovation, but to steer it.

Still, the reality tells a different story. In 2024, around 23% of Dutch companies used one or more AI technologies — almost 60% among large enterprises. New figures show that growth is speeding up: according to Unlocking Europe’s AI Potential – Netherlands Edition 2025 (by EY and The Lisbon Council, with Microsoft), 49% of Dutch companies now use AI — a 26% increase in just one year.

In its report "Focus on AI in the Central Government," the Netherlands Court of Audit found that many AI systems within the government are still experimental. Only some are fully operational, and for one-third it’s unclear whether they even function properly. The Dutch Data Protection Authority, meanwhile, warns of uncontrolled data flows. Its vice-chair, Monique Verdier, summed it up clearly:

“Once data is inside a model, you lose control — it can’t just be taken out again, and the consequences are hard to predict.”

So yes, there’s plenty of policy. But daily practice lags behind. As regulations tighten, many organizations still wrestle with one simple question: Where is our data actually stored — and who can access it?

That’s the heart of the problem. Laws can set direction, but they can’t enforce responsibility.

Waiting for legislation? We can’t afford to

Governments can set the course, but it’s up to organizations themselves to decide where their data lives and who can reach it.

That starts with simple, crucial questions:

Where is our data physically stored?

Which subcontractors or processors do our vendors use?

Can employees object to their data being used in AI applications?
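These three questions can be turned into a simple check that runs over a vendor inventory. The sketch below is a minimal illustration, not a compliance tool: the `Vendor` and `Subprocessor` classes, the `sovereignty_gaps` function, and the shortened `EU_EEA` country list are all hypothetical names and assumptions made up for this example, and the vendor data is invented.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumption: a deliberately incomplete set of ISO country codes treated as
# "inside the EU/EEA" for this sketch. Replace it with your own policy list.
EU_EEA = {"NL", "DE", "FR", "FI", "IE", "BE", "SE"}


@dataclass
class Subprocessor:
    name: str
    country: str  # ISO 3166-1 alpha-2 code


@dataclass
class Vendor:
    name: str
    storage_country: Optional[str]  # None means the vendor has not told us
    subprocessors: List[Subprocessor] = field(default_factory=list)
    employee_opt_out: bool = False  # can employees object to AI use of their data?


def sovereignty_gaps(vendor: Vendor) -> List[str]:
    """Return the open issues for one vendor, one per unanswered question."""
    gaps = []
    # Question 1: where is our data physically stored?
    if vendor.storage_country is None:
        gaps.append("storage location unknown")
    elif vendor.storage_country not in EU_EEA:
        gaps.append(f"data stored outside EU/EEA ({vendor.storage_country})")
    # Question 2: which subcontractors or processors does the vendor use?
    for sp in vendor.subprocessors:
        if sp.country not in EU_EEA:
            gaps.append(f"subprocessor outside EU/EEA: {sp.name} ({sp.country})")
    # Question 3: can employees object to their data being used in AI?
    if not vendor.employee_opt_out:
        gaps.append("no way for employees to object to AI use of their data")
    return gaps


# Invented example data, for illustration only.
example = Vendor(
    name="ExampleAI",
    storage_country="US",
    subprocessors=[Subprocessor("HostCo", "NL"), Subprocessor("LogCo", "US")],
)
for gap in sovereignty_gaps(example):
    print(f"{example.name}: {gap}")
```

A storage location inside the EU does not settle the matter on its own, since the legal reach over the vendor also counts. A list like this is only a starting point for the conversation with each supplier.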

Some Dutch municipalities are already asking these questions out loud. The Municipality of Amsterdam, for instance, temporarily banned generative AI tools such as ChatGPT and Copilot until clear guarantees exist for data safety and transparency.

Others are actively exploring European alternatives. According to Computable (2025), more and more public institutions are using Private AI — applications running in controlled environments such as their own data centers or Dutch clouds.

As Eugene Tuijnman (CEO of SLTN) puts it:

“AI is attractive to use, but you don’t want your business data ending up on the public internet. The extent of U.S. government access to our data has been a real wake-up call.”

It’s a clear signal: organizations can’t just wait for regulation. The real question is — do we dare to take control now, or will we let others decide for us?

Private AI refers to AI applications that run in closed, secure environments — for instance, in-house data centers or Dutch cloud systems. This way, organizations keep full control over their data and models, without sharing them externally.

Three possible paths: Which way will Europe go?

While concrete numbers for late 2025 are still scarce, three scenarios are taking shape:

Scenario 1: accelerated adoption. AI use explodes, driven by EU innovation funds and public-private collaboration. By the end of 2026, over half of European companies could be actively using AI, especially in knowledge-intensive sectors.

Scenario 2: fragmented growth. Some countries, like the Netherlands, Germany, and Finland, surge ahead, while southern and eastern regions lag behind. A “two-speed Europe” emerges, where digital inequality creates new dependencies.

Scenario 3: slowdown due to regulation and costs. The AI Act demands audits and documentation, which can weigh heavily on smaller organizations. If compliance becomes too complex or expensive, growth could stall.

Which scenario plays out depends on the choices organizations make now — about their infrastructure, safeguards, and data transparency.

Regaining control starts with collaboration

Digital sovereignty can’t be achieved alone. Companies, governments, and suppliers must work together to build a trustworthy ecosystem.

Here’s where to start:

Choose consciously. Work with vendors who guarantee EU-based data processing, transparent audits, and open lists of subprocessors.

Be transparent. Communicate clearly — internally and externally — about where data is stored, who has access, and for what purpose.

Invest locally. Support European innovation, even if it’s temporarily more expensive or less established than U.S. alternatives.

As former Dutch Minister Robbert Dijkgraaf said:

“To fully seize the opportunities of AI, we must invest in knowledge, infrastructure, and autonomy. Only then can Europe stay competitive and sovereign.”

Digital sovereignty: The true price of trust

Digital sovereignty isn’t about borders. It’s about trust. It means knowing where your data is and who has the final say over it. It means staying in control of your data, and therefore your future.

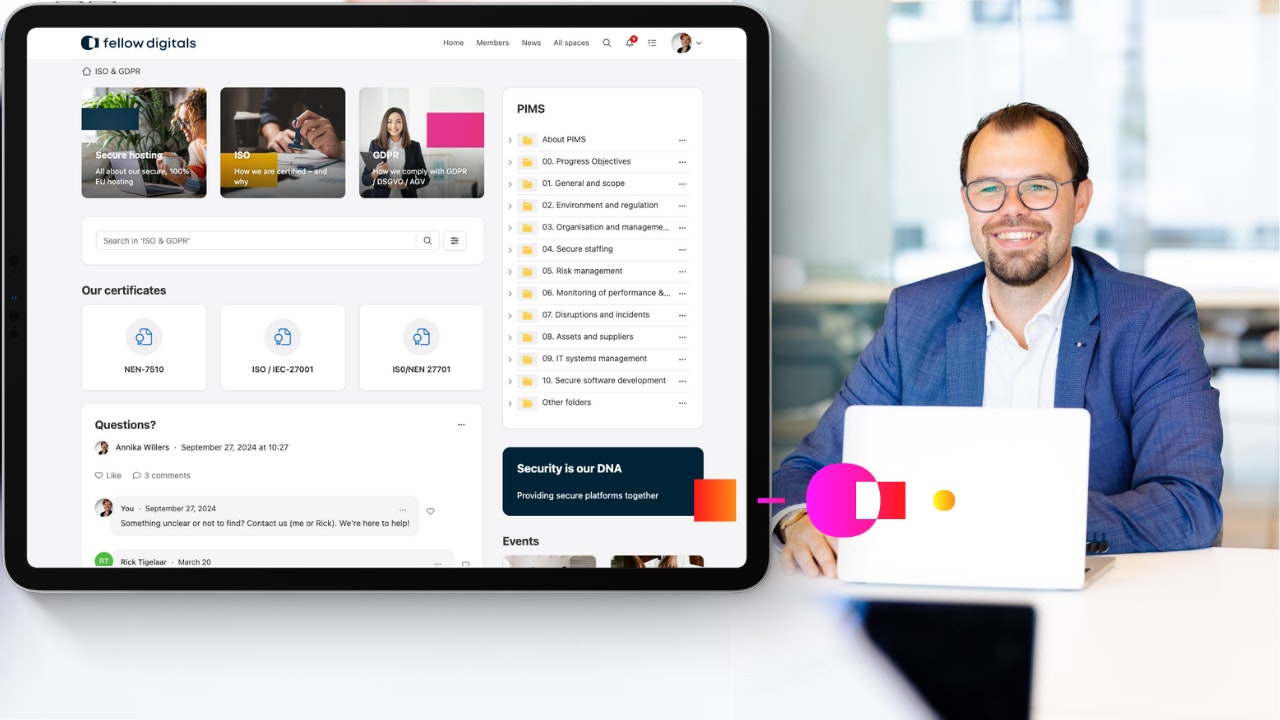

At Fellow Digitals, we take the protection of your data seriously. Our platform is built with privacy and security at its core, so your information remains under your control. We comply with leading standards for information security and privacy, including ISO 27001, ISO 27701, and NEN 7510, and we provide transparency, data security, and GDPR compliance across our platforms. Our secure, scalable solutions are designed to empower every employee while keeping data privacy and sovereignty at the forefront. As a company, we commit to secure-by-design software, robust authentication protocols, and data hosting within the EU.

Europe is taking steps forward. AI initiatives are growing, but the gap with the U.S. remains wide. In 2025, more than 80% of global AI investment went to U.S. companies; Europe accounted for just 9%. The EU Data Act and investments in European infrastructure bring us closer to autonomy — but policy alone won’t do it.

Every organization that chooses transparency, privacy, and European infrastructure helps rebuild trust in AI. Because once you lose control of your data, you lose control of your future.

The question isn’t if we’ll reclaim that control — but when.

To learn more about how we prioritize data security and safeguard your digital workplace, check out our Security Leaflet. It outlines how Fellow Digitals meets the highest security standards and how you can benefit from our secure, GDPR-compliant solutions.
